I’ve already talked about how we need to tap into unleashing our inner toddler by asking “why”. But what questions do we ask?

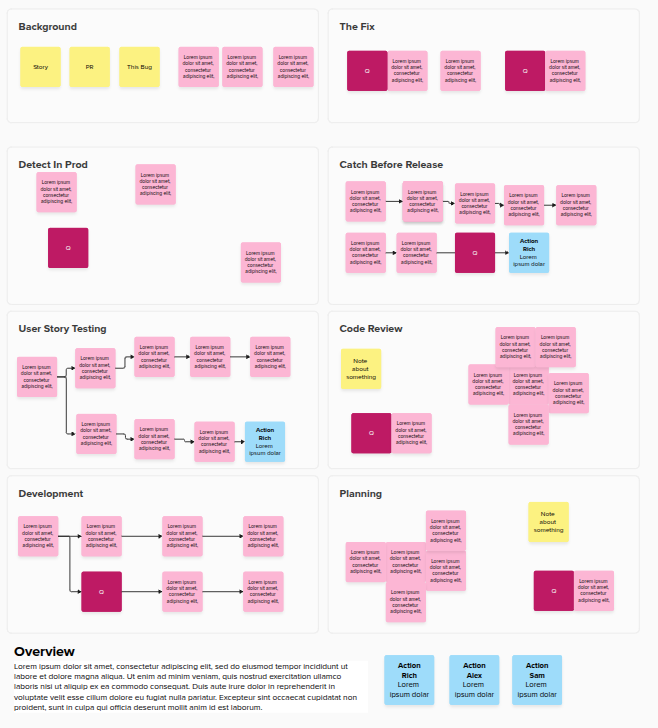

Background

Before getting into the guts of the RCA I like to go through the background. This is partly to act as a refresher for everyone as it may have been a few weeks but also it will help guide me in my questioning.

This usually means sharing:

- Links to the defect we’re RCAing & the original ticket

- Links to PRs to fix the issue and where possible the original (“offending”) PR.

Then asking:

- Can you describe the problematic behaviour? (i.e. what was actually wrong from a user’s point of view)

- Can you describe the describe the nature of the code fix?

- What do you remember from working on the story?

- How long did it take?

- How many people were involved?

The Fix

Before learning more about why the issue came to be, let’s make sure that we’re confident in the fix. I like to ask two questions here:

- How resilient is the fix?

- Will we know if the behaviour regresses again? (i.e. did you add automated tests)

Quality Engineering Throughout The SDLC

Now we get into the real important questions. This is where we go through the software development life cycle and think about what we did and whether there were opportunities to (realistically) catch it then.

First of all, if this was an escape, lets ask if we could have caught it in production (e.g. monitoring), release testing or epic close off testing. I wouldn’t advocate for just asking “could have have caught it here?” but asking around what the process is, what was the testing performed and is this something in the scope of what we’d usually test?

We then move on to the story within the sprint, starting with testing of the original story / bug. We’re trying to understand whether this was a brain fart (it happens) or is it just something that we wouldn’t usually consider testing? If not, why not?

Then we get into more technical. We’re looking at the PR, starting with code review. I’ll be asking about the nature of the bug and is that something that we’d look for? I’d want to understand whether SMEs were involved & if not, why not? Did they check the testing notes & automated tests in the code review? Code reviews aren’t ever going to catch everything but it is good to discuss this process. It is a nice chance for people to get to talk about the value and role of a code review too.

I then concentrate on the developer’s testing. What had they covered through automated and hands on tests? How much was iterative? As a former dev, I know all too well how even a well intended developer who tests their work can let things come through here (see dev BLISS).

We’re back then to technical discussions on the code. This is where I hope the architect can ask a few questions, although regularly other team members often chip in. This discussion is a great way for the team to learn from each other.

You might think that now that we’ve talked about the types of testing and the development challenges that we may stop there, but no we don’t!

The teams will have planning and refinement when we’re breaking down the story. We do test strategies and planning at epic and sometimes user story level. We think about the complexity of the code work with architectural studies before starting an epic. Let’s continue diving into these.

Again we’re asking about what was done, whether this is a scenario that could have been caught, either behaviour wise or in code, and tapping into what more we could have done. This helps us with spread left.

A Parting Question

Near the start I asked about our confidence in catching this issue again. Unless we’re running out of time (unfortunately often), I like to ask a similar but slightly wider question. How confident are we that we won’t see a repeat of the issue? Not necessarily the same issue but a similar one.

Summary Section

Finally I’ll have a summary section with actions, learnings and a summary of the RCA. Often written up afterwards because unsurprisingly the hour I book for RCAs isn’t always enough to cover everything in this post! I’ll explain a little more on this in a separate post.

So in short…

We start off by discussing the background of the story to refresh ourselves and help us get an idea on what threads are best to pull on as we go into things. We’ll also check we’re confident in the fix.

We then take our time going through the SDLC. We’re not just asking “could we have caught it?” or “why didn’t we catch it?” but looking at the actions, steps and processes to understand the answer to this.

I switched the ordering from starting with the first stages of the story to starting in prod after advice from a great chap called Stu Ashman. I found this got us much more engagement in some of the testing and activities around post release. You’ll also see how through the different stages we are asking slightly different questions to consider more than “why didn’t we catch it?”.

We’re using every stage as a learning opportunity.

… and that makes for a meaningful RCA!